Life Beyond Hadoop

Sunday, February 9, 2014 at 3:22PM

~ Prince

"Yet, for all of the SQL-like familiarity, they ignore one fundamental reality – MapReduce (and thereby Hadoop) is purpose-built for organized data processing (jobs). It is baked from the core for workflows, not ad hoc exploration." (Why the Days are Numbered for MapReduce as we Know It)

I first started with Big Data back in 2008, when CouchDB introduced itself as New! and Different! and offered live links to the Ruby on Rails development that we were doing. It worked, but it couldn't easily explain why it was better than the MySQL and PostgreSQL that we were using. By 2010 I had hired (and gotten brilliant work from) Cindy Simpson and built a web system backed by MongoDB, and it was great for reasons we could understand, namely:

- It could handle loosely structured and schema'd data

- Mongoid and MongoMapper gave it a nice link to Ruby on Rails

- It was a straightforward step beyond its more SQL-y cousins

- Binary JSON (and the end of XML as we knew it)

I wrote about it here (NoSQL on the Cloud), and followed that writing with other notes on Redis, Hadoop, Riak, Cassandra, and Neo4J before heading off for the Big Data wilds of Accenture.

Accenture covered every kind of data analysis, but everyone's love there was All Hadoop, All the Time, and Hadoop projects produced a lot of great results for Accenture teams and customers. Still, it's been 5 years since I started in Big Data, and it's more than time to take a look to see what else the Big Data world offers BEYOND Hadoop. Let's start with what Hadoop does well:

What Hadoop / MapReduce does well:

- ETL. This is Hadoop's Greatest hit: MapReduce makes it very easy to program data transformations, and Hadoop is perfect for turning the mishmash you got from the web into nice analytic rows-and-columns

- MapReduce runs in massively parallel mode right "out of the box." More hardware = faster, without a lot of extra programming.

- MapReduce through Hadoop is open source and freely (as in beer) licensed; DW tools have recently run as much as $30K / terabyte in licensing fees

- Hadoop has become the golden child, the be-all and end-all of modern advanced analytics (even where it really doesn't fit the problem domain)
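To make the ETL point concrete, here is a minimal single-machine sketch of the map, shuffle, and reduce steps in plain Python. It is not Hadoop's API: the three-field log format and the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) are assumptions made up for illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Map: parse one raw log line and emit (path, 1); drop malformed lines."""
    parts = line.split()
    if len(parts) != 3:  # assumed format: METHOD PATH STATUS
        return []
    _method, path, _status = parts
    return [(path, 1)]


def shuffle(pairs):
    """Shuffle: group every emitted value under its key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: collapse all values for one key into a single output row."""
    return key, sum(values)


def run_job(lines):
    """Run map, shuffle, and reduce in sequence on one machine."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


# Hypothetical messy input: one line is garbage and gets dropped by the map step.
raw_lines = ["GET /home 200", "???", "GET /about 200", "POST /home 404"]
hits_per_path = run_job(raw_lines)
```

Each `map_phase` call sees only its own line and each `reduce_phase` call only its own key, which is what lets a framework spread the work across machines without extra programming.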

These are all great, but even Mighty Hadoop falls short of The Computer Wore Tennis Shoes, 'Open the pod bay door, HAL', and Watson. It turns out that there are A LOT of places where Hadoop really doesn't fit the problem domain. The first problems are tactical issues — things that Hadoop might do well, but it just doesn't:

Tactical Hadoop Issues:

- Hadoop is run by a master node (the namenode), which is a single point of failure.

- Hadoop lacks acceleration features, such as indexing

- Hadoop provides neither data integrity nor data provenance, making it practically impossible to prove that results aren't wooden nickels

- HDFS stores three copies of all data (basically by brute force) — DBMS and advanced file systems are more sophisticated and should be more efficient

- Hive (which provides SQL joins, and rudimentary searches and analysis on Hadoop) is slow.

Strategic Hadoop Issues:

Then there are the strategic issues: places where map and reduce just aren't the right fit to the solution domain. Hadoop may be Turing-complete, but that doesn't make it a great match for the whole solution domain. Still, as the Big Data Golden Child, Hadoop has been applied to everything! The realm of data and data analysis is (unbeknownst to many) so much larger than just MapReduce! These different solution domains were once thought to be limited in number, and since the first paper on them identified 7 of them, they were referred to as the "7 Dwarfs."

More have been revealed since that first paper, and Dwarf Mine offers a more general look at the kinds of solutions that make up the Data Problem Domain:

- Dense Linear Algebra

- Sparse Linear Algebra

- Spectral Methods

- N-Body Methods

- Structured Grids

- Unstructured Grids

- MapReduce

- Combinational Logic

- Graph Traversal

- Dynamic Programming

- Backtrack and Branch-and-Bound

- Graphical Models

- Finite State Machines

These dwarves cover the Wide Wide World of Data, and MapReduce (and thus Hadoop) is merely one dwarf among many. "Big Data" can be so much bigger than we've seen, so let's see what paths to progress we might make from here…

If you have just some data: Megabytes to Gigabytes

- LinAlg: the old Fortran linear algebra routines are one of my favorite tools for problems that look like Big Data but are really Fast Data. The article referenced above, SVD Recommendation System in Ruby, is a great example of one of the greatest hits of the Big Data era (Recommendation Engines), explained and modeled with LinAlg and a little Ruby program.

- Julia — Julia is a new entry in the systems-language armory for solving about anything with data that may scale to big. Julia has a sweet, simple syntax, and as the following table shows it is already blisteringly fast:

Julia is new, but it was built from the ground up with support for parallel computing, so I expect to see more from Julia as time goes by.

If you have kind of a lot of data: up to a Terabyte

Parallel Databases

Parallel and in-memory databases start from a familiar world (RDBMS storage and analytics) and extend it to order-of-magnitude greater processing speeds, with the same ACID features and SQL access that generations have already run very successfully. The leading parallel players also generally offer the following advantages over Hadoop:

- Flexibility: MapReduce provides a lot more generality in what can be performed by the programmer and almost limitless freedom, as long as you stay in the map/reduce processing model and are willing to give up intermediate results and state. Modern database systems generally support user-defined functions and stored procedures that trade freedom for a more conventional programming model.

- Schema support: Parallel databases require data to fit into the relational data model, whereas MapReduce systems allow users to free format the data. The added work is a drawback, but since the principal patterns we're seeking are analytics and reporting, that "free format" generally doesn't last long in the real world.

- Indexing: Indexes are so fast and valuable that it's hard to imagine a world without indexing. Moving text searches from SQL to SOLR or Sphinx is the nearest comparison I can make in the web programming world — once you've tried it you'll never go back. This feature is however lacking in the MapReduce paradigm.

- Programming Language: SQL is not exactly Smalltalk as a high-level language, but almost every imaginable problem has already been solved and a Google search can take even novices to some pretty decent sample code.

- Data Distribution: In a modern DBMS, query optimizers can work wonders in speeding access times. In most MapReduce systems, data distribution and optimization are still often manual programming tasks.
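The indexing and optimizer points in the list above are easy to see on a small scale. The sketch below uses SQLite from Python's standard library as a stand-in for a "real" parallel database. The `events` table, its columns, and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, action TEXT)"
)
# Hypothetical data: 10,000 rows spread evenly over 1,000 users.
conn.executemany(
    "INSERT INTO events (user_id, action) VALUES (?, ?)",
    [(i % 1000, "click") for i in range(10000)],
)

QUERY = "SELECT action FROM events WHERE user_id = ?"


def query_plan(connection, sql, params):
    """Return the optimizer's plan text (last column of EXPLAIN QUERY PLAN)."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


# Without an index the optimizer has to scan the whole table.
plan_before = query_plan(conn, QUERY, (42,))

# With an index on user_id it can jump straight to the matching rows.
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
plan_after = query_plan(conn, QUERY, (42,))

matches = conn.execute(QUERY, (42,)).fetchall()
```

The query text never changes; the optimizer picks the index on its own once it exists. In a plain MapReduce job, the equivalent speed-up would have to be designed and coded by hand.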

I've highlighted SciDB/Paradigm4 and VoltDB in the set above, not (only) because they are both the brainchild of Michael Stonebraker, but because both he and they have some of the best writing on the not-Hadoop side of the big data (re)volution.

Specific Solutions: Real-time data analysis

- Spark

  - Spark is designed to make data analytics fast to write, and fast to run. Unlike many MapReduce systems, Spark allows in-memory querying of data, and consequently Spark out-performs Hadoop on many iterative algorithms.

- Storm

  - Storm is a distributed realtime computation system. Similar to how Hadoop provides a set of general primitives for doing batch processing, Storm provides a set of general primitives for doing realtime computation.

- MPI: Message Passing Interface

  - MPI is a standardized and portable message-passing system designed to function on a wide variety of parallel computers. The MPI standard defines the syntax and semantics of a core of library routines useful to a wide range of users writing portable message-passing programs in Fortran or the C programming language.
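Why in-memory querying helps iterative algorithms can be sketched without Spark at all. The toy below is plain Python, not Spark's API: `CountingSource` is a hypothetical stand-in for slow storage, and `cached` imitates the idea of keeping a working set in memory between passes.

```python
class CountingSource:
    """Hypothetical stand-in for slow storage; counts how often it is read."""

    def __init__(self, values):
        self._values = list(values)
        self.reads = 0

    def read_all(self):
        self.reads += 1
        return list(self._values)


def iterative_mean(load, iterations, step=0.5):
    """Gradient-descent estimate of the mean, calling load() once per pass."""
    estimate = 0.0
    for _ in range(iterations):
        data = load()
        gradient = sum(estimate - x for x in data) / len(data)
        estimate -= step * gradient
    return estimate


def cached(load):
    """Wrap a loader so data is fetched once and then served from memory."""
    cache = []

    def loader():
        if not cache:
            cache.append(load())
        return cache[0]

    return loader


# Batch style: every iteration goes back to storage.
source_a = CountingSource([1.0, 2.0, 3.0, 4.0])
batch_style = iterative_mean(source_a.read_all, iterations=20)

# In-memory style: storage is read once, later iterations reuse the copy.
source_b = CountingSource([1.0, 2.0, 3.0, 4.0])
in_memory = iterative_mean(cached(source_b.read_all), iterations=20)
```

Both runs compute the same estimate, but the first touches storage on every pass and the second only once. With real data sizes, that repeated read is where a chain of batch jobs tends to lose time on iterative work.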

Specific Solution Types: Graph Navigation:

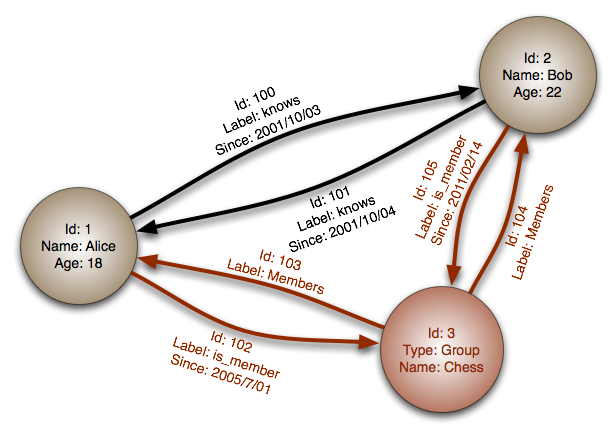

I've written about Graph databases before (Graph Databases and Star Wars), and they are the most DSL-like approach to many kinds of social networked problems, such as The Six Degrees of Kevin Bacon. The leaders in the field (as of this writing) are:

Specific Solution Types: Analysis:

Hadoop is great at crunching data yet inefficient for analyzing data, because each time you add, change or manipulate data you must stream over the entire dataset.

- Dremel

  - Dremel is a scalable, interactive ad-hoc query system for analysis of read-only nested data.

- Percolator

  - Percolator is a system for incrementally processing updates to a large data set. Replacing a batch-based indexing system with one based on incremental processing using Percolator significantly speeds up the process and reduces the time to analyze data.

  - Percolator’s architecture provides horizontal scalability and resilience. It reduces latency (the time between crawling a page and its availability in the index) by a factor of 100, and it simplifies the algorithm. The big advantage of Percolator is that indexing time is now proportional to the size of the page being indexed, and no longer to the size of the whole existing index.
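The difference between batch and incremental indexing can be sketched in a few lines of plain Python. This is only an illustration of the idea, not Percolator's design or API: the `IncrementalIndex` class, the `rebuild` function, and the whitespace tokenizer are all assumptions.

```python
from collections import defaultdict


def rebuild(pages):
    """Batch style: rebuild the whole inverted index from every page."""
    postings = defaultdict(set)
    for page_id, text in pages.items():
        for term in set(text.lower().split()):
            postings[term].add(page_id)
    return dict(postings)


class IncrementalIndex:
    """Incremental style: an update touches only the terms that changed."""

    def __init__(self):
        self.postings = defaultdict(set)  # term -> ids of pages containing it
        self.page_terms = {}  # page id -> terms currently indexed for it

    def upsert(self, page_id, text):
        """Index or re-index one page; return how many postings were touched."""
        new_terms = set(text.lower().split())
        old_terms = self.page_terms.get(page_id, set())
        for term in old_terms - new_terms:
            self.postings[term].discard(page_id)
            if not self.postings[term]:
                del self.postings[term]
        for term in new_terms - old_terms:
            self.postings[term].add(page_id)
        self.page_terms[page_id] = new_terms
        return len(old_terms ^ new_terms)

    def search(self, term):
        """Return the sorted ids of pages that contain the term."""
        return sorted(self.postings.get(term.lower(), ()))


# Hypothetical crawl: two pages, then one of them changes.
pages = {"a": "hadoop batch jobs", "b": "spark in memory"}
index = IncrementalIndex()
for page_id, text in pages.items():
    index.upsert(page_id, text)

pages["a"] = "hadoop streaming jobs"
touched = index.upsert("a", pages["a"])
```

The work done by `upsert` depends on the size of the changed page, while `rebuild` walks every page again to reach the same result.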

If you really do have lots of data: Terabytes — but want something other than Hadoop

- HPCC

  - HPCC enables parallel-processing workflows through Enterprise Control Language (ECL), a declarative (like SQL and Pig), data-centric language.

  - Like Hadoop, HPCC has a rich ecosystem of technologies. HPCC has two “systems” for processing and serving data: the Thor Data Refinery Cluster, and the Roxie Rapid Data Delivery Cluster. Thor is a data processor, like Hadoop. Roxie is similar to a data warehouse (like HBase) and supports transactions. HPCC uses a distributed file system.

- MapReduce-y solutions for Big Data without Hadoop:

  - The Hadoop ecosystem (beautifully described in The Hadoop Ecosystem Table) has spawned a multitude of related projects that address one or more of Hadoop's shortcomings in a MapReduce-y way. This list is dynamic, as both it and the Hadoop ecosystem are still evolving rapidly. I've listed some of the leading contenders below, and you may see more detail on them in these pages in the future. Here are some of the most notable candidates:

Data is a rich world, and even this timestamped note will likely be outdated by the time it's published. The most exciting part of the "Big Data" world is that "Big" is increasingly an oxymoron: ALL data is "big", and ever more powerful tools are appearing for all scales of data. Hadoop is a great tool, but in some aspects it has "2005" written all over it. Review the field, choose the tools for your needs, and…

"…go crazy — put it to a higher floor…"

John Repko | Comments Off |
